Python API

You can use polarityjam from within Python to run your analysis. A Jupyter notebook (https://jupyter.org/) is a good tool for getting a first impression of the data you are about to analyse, and paired with an Anaconda Python distribution it is a powerful option. The following shows how to use polarityjam in a Python shell or, preferably, in a Jupyter notebook. What follows is an example of such a notebook. It can be found here!

Let's first look at the API. There are four major parts to it:

- Parameter
- Segmentation
- Extraction
- Visualization

We will walk you through each of them here. First, familiarize yourself with the API structure.

[1]:

# must run in an environment where polarityJaM is installed

from polarityjam import Extractor, Plotter, PropertiesCollection

from polarityjam import RuntimeParameter, PlotParameter, SegmentationParameter, ImageParameter

from polarityjam import PolarityJamLogger

from polarityjam import load_segmenter

from polarityjam.utils.io import read_image

from pathlib import Path

[2]:

# Set up a logger that only reports warnings; pass "INFO" or "DEBUG" to get more information

plog = PolarityJamLogger("WARNING")

Parameter

First, let's set up the data we want to use and the parameters describing what we want to do. The images come from our example dataset, which is part of polarityJaM. Here is the link to the example data! Simply extract the zip archive into the same directory as this notebook.

[3]:

### ADAPT ME ###

path_root = Path("")

input_file = path_root.joinpath("data/golgi_nuclei/set_2/060721_EGM2_18dyn_02.tif")

output_path = path_root.joinpath("polarityjam_out/")

output_file_prefix = "060721_EGM2_18dyn_02"

### ADAPT ME ###
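A wrong path is easiest to catch before any image is read. The following is a minimal sketch using only the standard library; `check_paths` is a hypothetical helper and not part of polarityjam, and whether polarityjam creates the output folder on its own is not covered here.

```python
from pathlib import Path
import tempfile


def check_paths(input_file: Path, output_path: Path) -> None:
    """Hypothetical helper: fail early if the image is missing, then create the output folder."""
    if not input_file.is_file():
        raise FileNotFoundError(f"Input image not found: {input_file.resolve()}")
    output_path.mkdir(parents=True, exist_ok=True)


# Demonstration with a temporary directory standing in for the notebook folder.
with tempfile.TemporaryDirectory() as tmp:
    demo_root = Path(tmp)
    demo_input = demo_root / "example.tif"
    demo_input.touch()  # empty placeholder file, not a real image
    demo_output = demo_root / "polarityjam_out"
    check_paths(demo_input, demo_output)
    output_created = demo_output.is_dir()
```

In the notebook, the call would be `check_paths(input_file, output_path)` with the variables defined in the cell above.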

After an image has been read in, you need to specify which channels hold which kind of information. Use the ImageParameter class for that.

[4]:

# read input

img = read_image(input_file)

# describe your image with ImageParameter

params_image = ImageParameter()

# set the channels

params_image.channel_organelle = 0 # golgi channel

params_image.channel_nucleus = 2

params_image.channel_junction = 3

params_image.channel_expression_marker = 3

params_image.pixel_to_micron_ratio = 2.4089

print(params_image)

ImageParameter:

channel_junction 3

channel_nucleus 2

channel_organelle 0

channel_expression_marker 3

pixel_to_micron_ratio 2.4089
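A channel index that points outside the image is an easy mistake to make. The sketch below checks the indices against the number of channels, assuming zero-based indices (as `channel_organelle = 0` suggests). `validate_channels` is a hypothetical helper, the channel count is passed in by hand because the axis layout of the image is not described here, and `None` is treated as "not set", which is an assumption of this sketch rather than polarityjam's convention.

```python
def validate_channels(channels: dict, n_channels: int) -> list:
    """Return the names of channel settings that point outside the image."""
    return [
        name
        for name, index in channels.items()
        if index is not None and not 0 <= index < n_channels
    ]


# Channel indices as set above; the image is assumed to have 4 channels.
channels = {
    "channel_organelle": 0,
    "channel_nucleus": 2,
    "channel_junction": 3,
    "channel_expression_marker": 3,
}
invalid = validate_channels(channels, n_channels=4)
# The same settings applied to a 3-channel image would be flagged.
invalid_for_three_channels = validate_channels(channels, n_channels=3)
```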

The other parameters that should now be specified are RuntimeParameter and PlotParameter.

[5]:

# define other parameters, use default values

params_runtime = RuntimeParameter()

params_plot = PlotParameter()

Let’s look at our parameter default values:

[6]:

print(params_runtime)

print(params_plot)

RuntimeParameter:

extract_group_features False

membrane_thickness 5

feature_of_interest cell_area

min_cell_size 50

min_nucleus_size 10

min_organelle_size 10

dp_epsilon 5

cue_direction 0

connection_graph True

segmentation_algorithm CellposeSegmenter

remove_small_objects_size 10

clear_border True

save_sc_image False

keyfile_condition_cols ['short_name']

PlotParameter:

plot_junctions True

plot_polarity True

plot_elongation True

plot_circularity True

plot_marker True

plot_ratio_method True

plot_shape_orientation True

plot_foi True

plot_sc_image False

outline_width 2

show_polarity_angles True

show_graphics_axis False

pixel_to_micron_ratio 1.0

plot_scalebar True

length_scalebar_microns 20.0

plot_statistics None

graphics_output_format ['png']

dpi 300

graphics_width 5

graphics_height 5

membrane_thickness 5

fontsize_text_annotations 6

font_color w

marker_size 2

Changing any of these is easy and can be done as shown below:

[7]:

# change some parameters

params_runtime.membrane_thickness = 6

params_runtime.min_organelle_size = 9

To see all possible parameters and the values they can take, see the Usage section of the documentation.

All parameters from single file

Because there are a lot of parameters to set, we can also load all parameters from a single file. For every parameter that is not contained in this file, the default value is used automatically. The code would look like this:

Note

The order of your notebook cells matters! Parameters defined in previous cells can be overwritten by the parameters defined in the yml file.

[ ]:

### CHANGE ME ###

path_to_yml = "/path/to/your/yml/file.yml"

### CHANGE ME ###

params_image = ImageParameter.from_yml(path_to_yml)

params_runtime = RuntimeParameter.from_yml(path_to_yml)

params_plot = PlotParameter.from_yml(path_to_yml)

params_seg = SegmentationParameter.from_yml(path_to_yml) # default segmentation algorithm is used if not specified in yml
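For orientation, here is a sketch of what such a parameter file might contain. The flat `key: value` layout is an assumption and not confirmed by this page, so check the Usage section for the actual format. The keys and values are the ones set earlier in this notebook, and the file is written and read back with the standard library only.

```python
from pathlib import Path
import tempfile

# Assumed layout: one flat "key: value" pair per line.
yml_text = """\
channel_organelle: 0
channel_nucleus: 2
channel_junction: 3
channel_expression_marker: 3
pixel_to_micron_ratio: 2.4089
membrane_thickness: 6
min_organelle_size: 9
"""

yml_path = Path(tempfile.mkdtemp()) / "parameters.yml"
yml_path.write_text(yml_text)

# Read the file back without a YAML library, just to show what it contains.
written = dict(
    line.split(": ", 1) for line in yml_path.read_text().splitlines() if line.strip()
)
```

Parameters left out of the file would then fall back to their defaults, as described above.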

Segmentation

Before features can be extracted the image must be segmented. A segmentation procedure in polarityJaM consists of two steps. First, the image is prepared for segmentation. Second, the segmentation algorithm is applied to the prepared image.

You can specify the segmentation algorithm in the RuntimeParameter by changing the value of segmentation_algorithm. The default value is CellposeSegmenter, so the Cellpose segmentation algorithm is used unless you choose a different one.

[8]:

print("Used algorithm for segmentation: %s " % params_runtime.segmentation_algorithm)

Used algorithm for segmentation: CellposeSegmenter

[9]:

# Now define your segmenter and segment your image with the default algorithm and default parameters.

cellpose_segmentation, _ = load_segmenter(params_runtime)

# prepare your image for segmentation

img_prepared, img_prepared_params = cellpose_segmentation.prepare(img, params_image)

[10]:

# Define a plotter and check the image that was prepared for segmentation

plotter = Plotter(params_plot)

# plot input

plotter.plot_channels(img_prepared, img_prepared_params, output_path, input_file);

[11]:

# now segment your prepared image to get the masks

mask = cellpose_segmentation.segment(img_prepared, input_file)

# plot segmentation mask to check the quality

plotter.plot_mask(mask, img_prepared, img_prepared_params, output_path, output_file_prefix);
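Besides looking at the plot, a quick numeric check of the mask can help. The sketch below counts the pixels per label, assuming the mask is a 2D label image in which 0 marks the background (the convention Cellpose uses for its masks). `label_areas` and `small_labels` are hypothetical helpers, and comparing the areas with `min_cell_size` assumes that this parameter is measured in pixels.

```python
from collections import Counter


def label_areas(mask) -> dict:
    """Count pixels per label, skipping the assumed background label 0."""
    counts = Counter(int(value) for row in mask for value in row)
    counts.pop(0, None)
    return dict(counts)


def small_labels(mask, min_size: int) -> list:
    """Labels whose pixel count is below min_size."""
    return sorted(label for label, area in label_areas(mask).items() if area < min_size)


# Tiny hand-made label image standing in for a real mask.
toy_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 2],
    [3, 3, 3, 0],
]
areas = label_areas(toy_mask)
too_small = small_labels(toy_mask, min_size=3)
```

The helpers iterate row by row, so they accept nested lists as well as 2D NumPy arrays, although they are slow on large images.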

Change segmentation parameters

To specify the parameters of the segmentation algorithm, use the SegmentationParameter class. Unless you define them otherwise, the segmentation algorithm runs with its default parameters. Parameters that the segmentation algorithm does not use are ignored during segmentation, and no error is thrown. Make sure to check the parameters of the segmentation algorithm you are using.

[12]:

# define your segmentation parameters by starting with the default parameters

cellpose_parameter = SegmentationParameter(params_runtime.segmentation_algorithm)

# change specific parameters

cellpose_parameter.estimated_cell_diameter = 300

print(cellpose_parameter)

SegmentationParameter:

path cellpose.CellposeSegmenter

manually_annotated_mask

store_segmentation False

use_given_mask True

model_type cyto

model_type_nucleus nuclei

model_path

estimated_cell_diameter 300

estimated_nucleus_diameter 30

flow_threshold 0.4

cellprob_threshold 0.0

use_gpu False
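Because unused parameters are ignored without an error, a misspelled parameter name can go unnoticed. One way to guard against this is to only set names that already exist on the object. This is a sketch under the assumption that the parameter objects behave like plain Python objects. `set_known` is a hypothetical helper, and `DemoParameter` with its values is a stand-in, not a polarityjam class.

```python
def set_known(params, **changes):
    """Set attributes on params, refusing names that do not exist yet."""
    for name, value in changes.items():
        if not hasattr(params, name):
            raise AttributeError(f"Unknown parameter: {name!r}")
        setattr(params, name, value)
    return params


class DemoParameter:
    """Stand-in for a parameter object; not part of polarityjam."""

    def __init__(self):
        self.estimated_cell_diameter = 100  # placeholder value
        self.flow_threshold = 0.4


demo = set_known(DemoParameter(), estimated_cell_diameter=300)

try:
    set_known(demo, estimated_cel_diameter=250)  # misspelled on purpose
    typo_caught = False
except AttributeError:
    typo_caught = True
```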

[13]:

# now let's load the segmenter with the new parameters

cellpose_segmentation_altered_params, _ = load_segmenter(params_runtime, cellpose_parameter)

# using this `cellpose_segmentation_altered_params` to prepare your image and segment it would now use the altered parameters!

# img_prepared_altered, _ = cellpose_segmentation_altered_params.prepare(img, params_image)

# mask_altered = cellpose_segmentation_altered_params.segment(img_prepared_altered, input_file)

Change segmentation algorithm

You might notice that the segmentation algorithm you are using performs poorly on your data. Try different segmentation algorithms to find the one that works best for you.

For a list of included segmentation algorithms please look at the Usage page of the documentation.

We now show you how you can change the segmentation algorithm:

Note

Different segmentation algorithms might need different parameters. Make sure to check the parameters of the segmentation algorithm you are using.

[14]:

params_runtime.segmentation_algorithm = "DeepCellSegmenter"

deepcell_segmenter_parameters = SegmentationParameter(params_runtime.segmentation_algorithm)

deepcell_segmenter_parameters.segmentation_mode = "nuclear"

print(deepcell_segmenter_parameters)

SegmentationParameter:

path deepcell.DeepCellSegmenter

segmentation_mode nuclear

save_mask False

We changed the segmentation algorithm to DeepCell and the mode to “nuclear” to get a nucleus segmentation instead of a whole-cell segmentation, since Cellpose already does a great job on the cells. The next steps are analogous to segmenting cells: prepare and segment your image!

Note

When you use a new segmentation algorithm for the first time, it needs to be installed. This can take a while. Please be patient.

[15]:

### This might take a while ###

deepcell_segmentation, _ = load_segmenter(params_runtime, deepcell_segmenter_parameters)

# again, prepare your image for segmentation

img_prepared_deepcell, img_prepared_deepcell_params = deepcell_segmentation.prepare(img, params_image)

# again, segment your prepared image to get the instance masks - this time using the DeepCellSegmenter

mask_deepcell = deepcell_segmentation.segment(img_prepared_deepcell, input_file);

### This might take a while ###

13:35:37 INFO ~ Starting DeepCell-predict

13:35:40 INFO ~ 2023-10-18 13:35:40.332057: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:936] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

13:35:40 INFO ~ 2023-10-18 13:35:40.332442: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:936] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

13:35:40 INFO ~ 2023-10-18 13:35:40.360217: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudnn.so.8'; dlerror: libcudnn.so.8: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /home/jpa/.album/lnk/env/14/lib/python3.9/site-packages/cv2/../../lib64:/home/jpa/anaconda3/envs/polarityjam/lib/python3.8/site-packages/cv2/../../lib64:/usr/local/cuda-11/lib64:/lib/

13:35:40 INFO ~ 2023-10-18 13:35:40.360241: W tensorflow/core/common_runtime/gpu/gpu_device.cc:1850] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform.

13:35:40 INFO ~ Skipping registering GPU devices...

13:35:40 INFO ~ 2023-10-18 13:35:40.360494: I tensorflow/core/platform/cpu_feature_guard.cc:151] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

13:35:40 INFO ~ To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

13:35:45 INFO ~ WARNING:tensorflow:No training configuration found in save file, so the model was *not* compiled. Compile it manually.

13:35:45 INFO ~ Image resolution the network was trained on: 0.5 microns per pixel

13:35:49 INFO ~ Segmentation saved to: /tmp/tmpqo_7hejd/segmentation_segmentation

13:35:49 INFO ~ Finished DeepCell-predict

[16]:

# plot segmentation mask to check the quality

plotter.plot_mask(mask, img_prepared_deepcell, img_prepared_deepcell_params, output_path, output_file_prefix, mask_nuclei=mask_deepcell);

Feature extraction

Use the Extractor class to extract the features from your segmentation. Please provide the original image and not the image that is created in the preparation step of the segmentation.

[17]:

# feature extraction

collection = PropertiesCollection()

extractor = Extractor(params_runtime)

extractor.extract(img, params_image, mask, output_file_prefix, output_path, collection, segmentation_mask_nuclei=mask_deepcell);
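The steps so far can be collected into one function that processes several images into a single collection. The sketch uses only the calls shown in this notebook. It assumes that `extract` may be called repeatedly with the same collection, that `segmentation_mask_nuclei` is optional, and that the file stem is a suitable output prefix. `extract_features` itself is a hypothetical name.

```python
from pathlib import Path


def extract_features(input_files, output_path, params_image, params_runtime):
    """Segment and extract features for several images into one collection."""
    # Imported here so the function can be defined without polarityjam installed.
    from polarityjam import Extractor, PropertiesCollection, load_segmenter
    from polarityjam.utils.io import read_image

    collection = PropertiesCollection()
    extractor = Extractor(params_runtime)
    segmenter, _ = load_segmenter(params_runtime)

    for input_file in map(Path, input_files):
        img = read_image(input_file)
        img_prepared, _ = segmenter.prepare(img, params_image)
        mask = segmenter.segment(img_prepared, input_file)
        # The original image is passed on, not the prepared one.
        extractor.extract(
            img, params_image, mask, input_file.stem, output_path, collection
        )
    return collection
```

In the notebook, this would be called as `extract_features([input_file], output_path, params_image, params_runtime)`.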

[18]:

collection.dataset.head()

[18]:

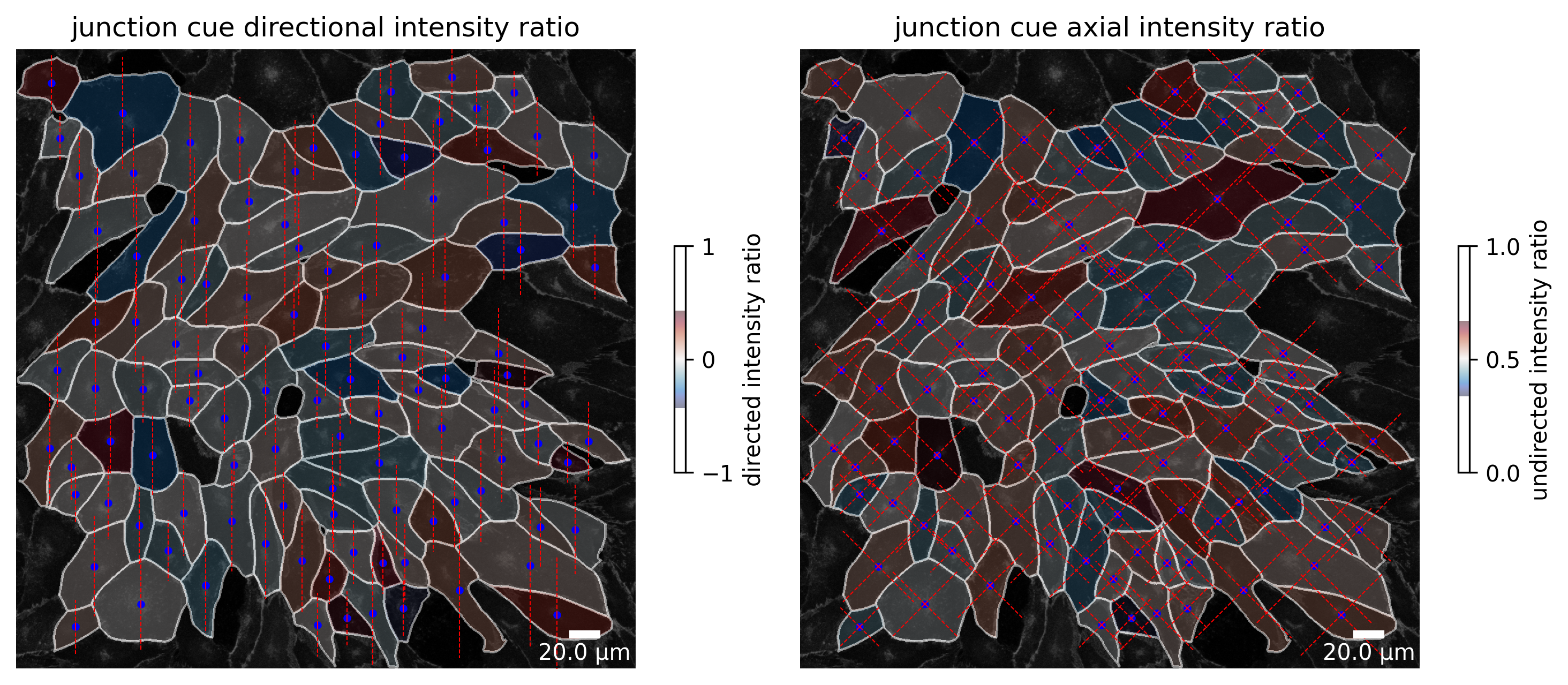

| | filename | img_hash | label | cell_X | cell_Y | cell_shape_orientation_rad | cell_shape_orientation_deg | cell_major_axis_length | cell_minor_axis_length | cell_eccentricity | ... | junction_perimeter | junction_protein_area | junction_mean_expression | junction_protein_intensity | junction_interface_linearity_index | junction_interface_occupancy | junction_intensity_per_interface_area | junction_cluster_density | junction_cue_directional_intensity_ratio | junction_cue_axial_intensity_ratio |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 060721_EGM2_18dyn_02 | 363944ca78d06717742fe4dbe142e026e325f48d | 11.0 | 720.508294 | 45.513774 | 3.124644 | 179.028931 | 147.191605 | 60.249653 | 0.912387 | ... | 362.450793 | 2295.0 | 324.822479 | 1.036445e+06 | 1.055778 | 0.591342 | 267.056168 | 451.610016 | 0.109910 | 0.458688 |
| 2 | 060721_EGM2_18dyn_02 | 363944ca78d06717742fe4dbe142e026e325f48d | 12.0 | 58.043105 | 55.697915 | 3.113451 | 178.387603 | 98.194399 | 77.941589 | 0.608247 | ... | 316.977705 | 1789.0 | 247.426178 | 6.332390e+05 | 1.075794 | 0.553699 | 195.988552 | 353.962555 | 0.286112 | 0.551979 |
| 3 | 060721_EGM2_18dyn_02 | 363944ca78d06717742fe4dbe142e026e325f48d | 13.0 | 619.535898 | 69.791661 | 2.815969 | 161.343146 | 102.410029 | 88.514931 | 0.502944 | ... | 337.912734 | 2077.0 | 265.672974 | 7.358640e+05 | 1.074227 | 0.605893 | 214.662784 | 354.291779 | -0.155887 | 0.607769 |
| 4 | 060721_EGM2_18dyn_02 | 363944ca78d06717742fe4dbe142e026e325f48d | 14.0 | 176.616147 | 104.956727 | 0.408647 | 23.413770 | 185.249457 | 143.634939 | 0.631520 | ... | 610.955411 | 2475.0 | 227.133942 | 1.009919e+06 | 1.078673 | 0.400356 | 163.364451 | 408.048096 | -0.255170 | 0.512134 |
| 5 | 060721_EGM2_18dyn_02 | 363944ca78d06717742fe4dbe142e026e325f48d | 15.0 | 823.013250 | 71.744770 | 2.500474 | 143.266594 | 72.316774 | 51.889923 | 0.696521 | ... | 216.409163 | 1772.0 | 397.937958 | 8.082580e+05 | 1.075565 | 0.796763 | 363.425354 | 456.127533 | -0.001117 | 0.461180 |

5 rows × 64 columns

[19]:

collection.dataset.to_csv(output_path.joinpath('features.csv'))
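Once the features are saved, the CSV file can be read back without polarityjam, for example to summarise a single column. The sketch below uses the standard library and a small sample file holding the `cell_eccentricity` values from the table above. `column_mean` is a hypothetical helper, and the sample file stands in for the real `features.csv`.

```python
import csv
import tempfile
from pathlib import Path
from statistics import mean


def column_mean(csv_path, column: str) -> float:
    """Mean of one numeric column of a features CSV."""
    with open(csv_path, newline="") as handle:
        return mean(float(row[column]) for row in csv.DictReader(handle))


# Small sample file using the cell_eccentricity values from the table above.
sample_path = Path(tempfile.mkdtemp()) / "features.csv"
sample_path.write_text(
    "label,cell_eccentricity\n"
    "11.0,0.912387\n"
    "12.0,0.608247\n"
    "13.0,0.502944\n"
    "14.0,0.631520\n"
    "15.0,0.696521\n"
)
mean_eccentricity = column_mean(sample_path, "cell_eccentricity")
```

For the real file, the call would be `column_mean(output_path.joinpath("features.csv"), "cell_eccentricity")`.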

Visualization

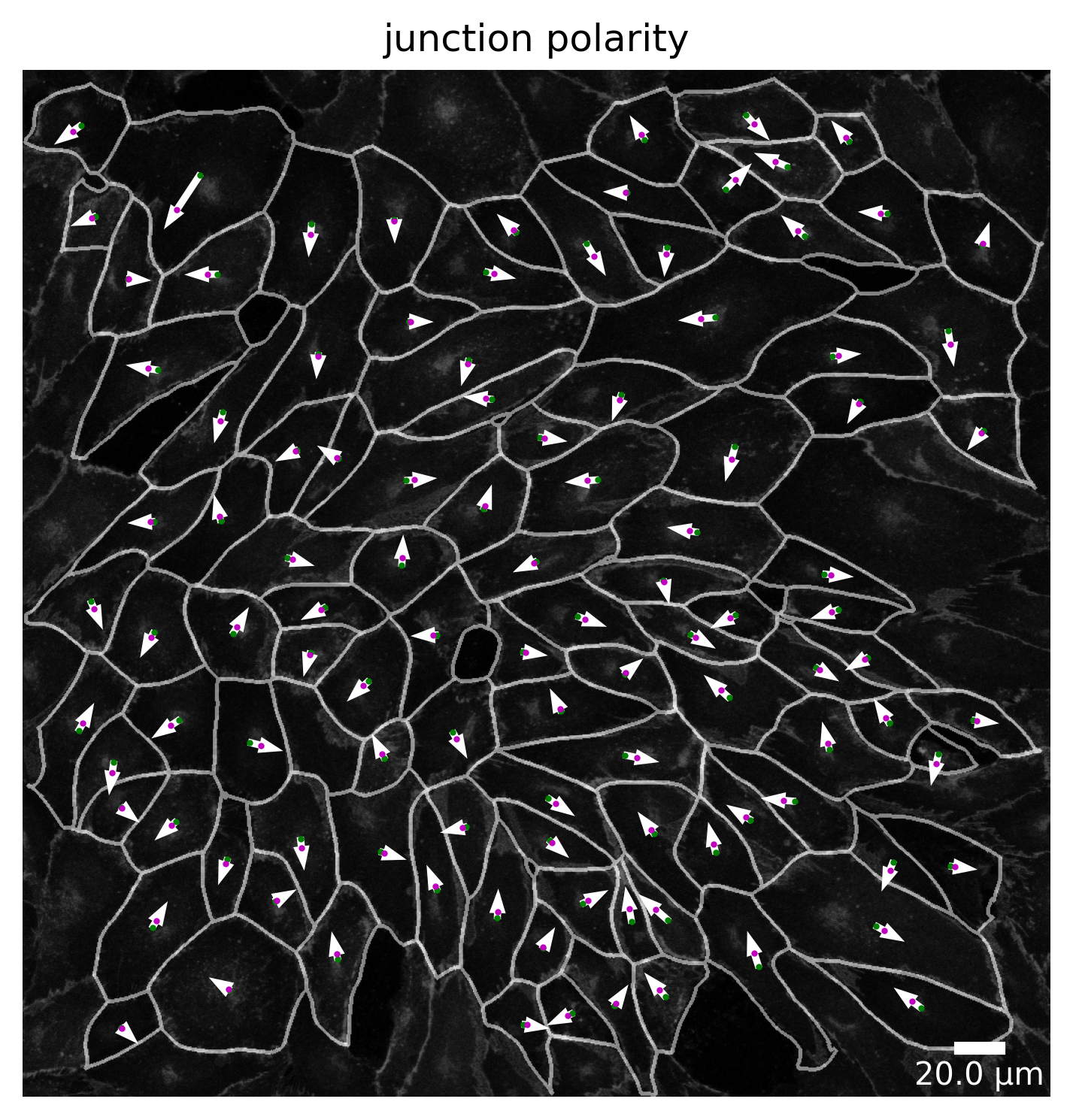

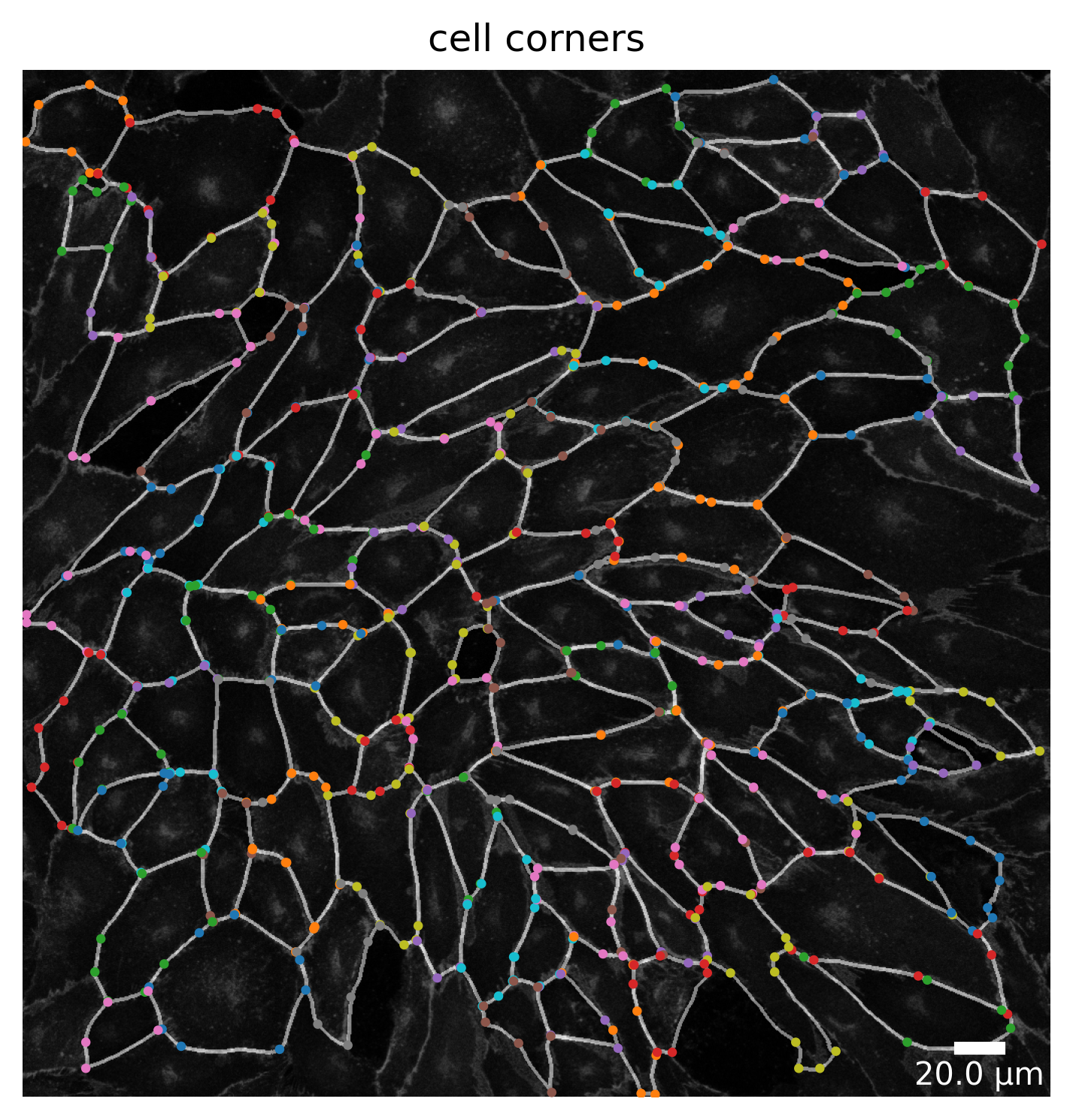

At each point in the analysis process visualization is necessary for quality control! Use the Plotter class to look at processing steps.

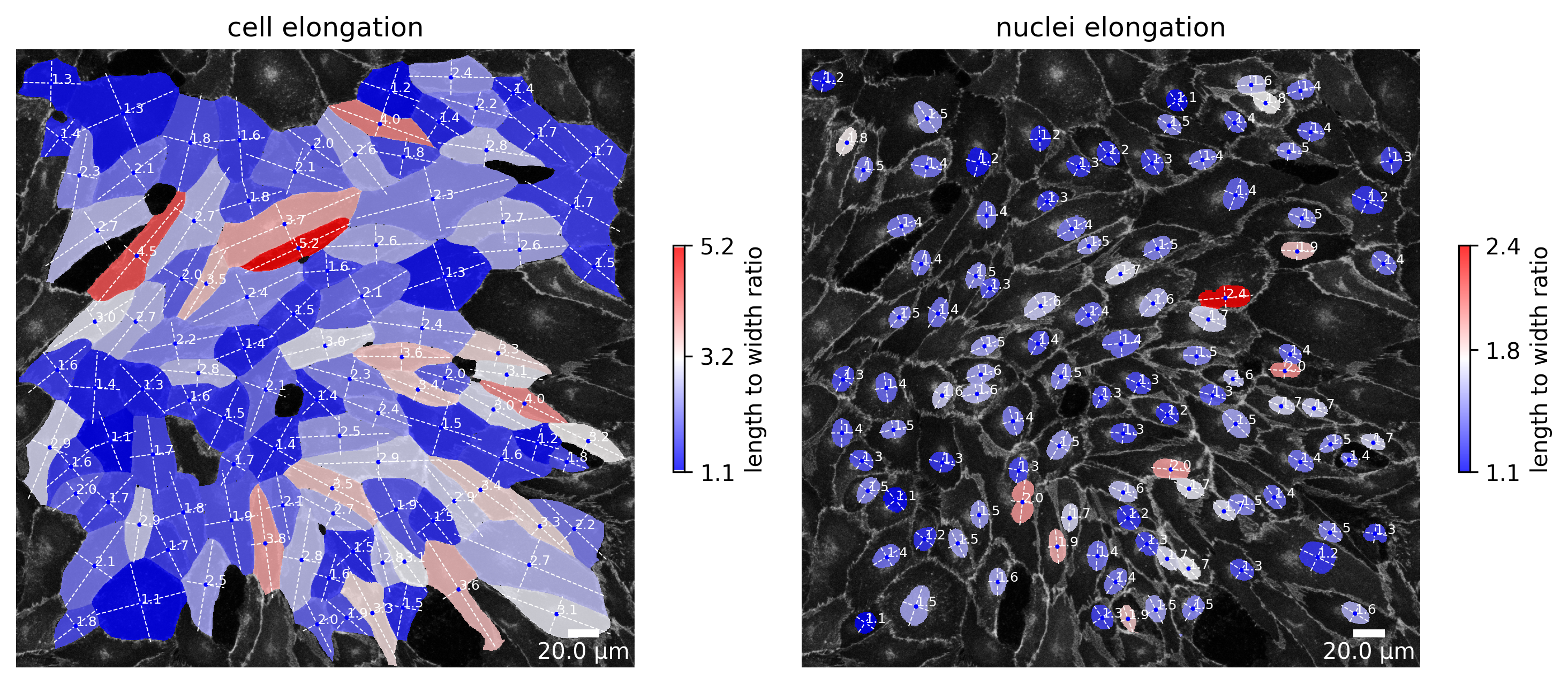

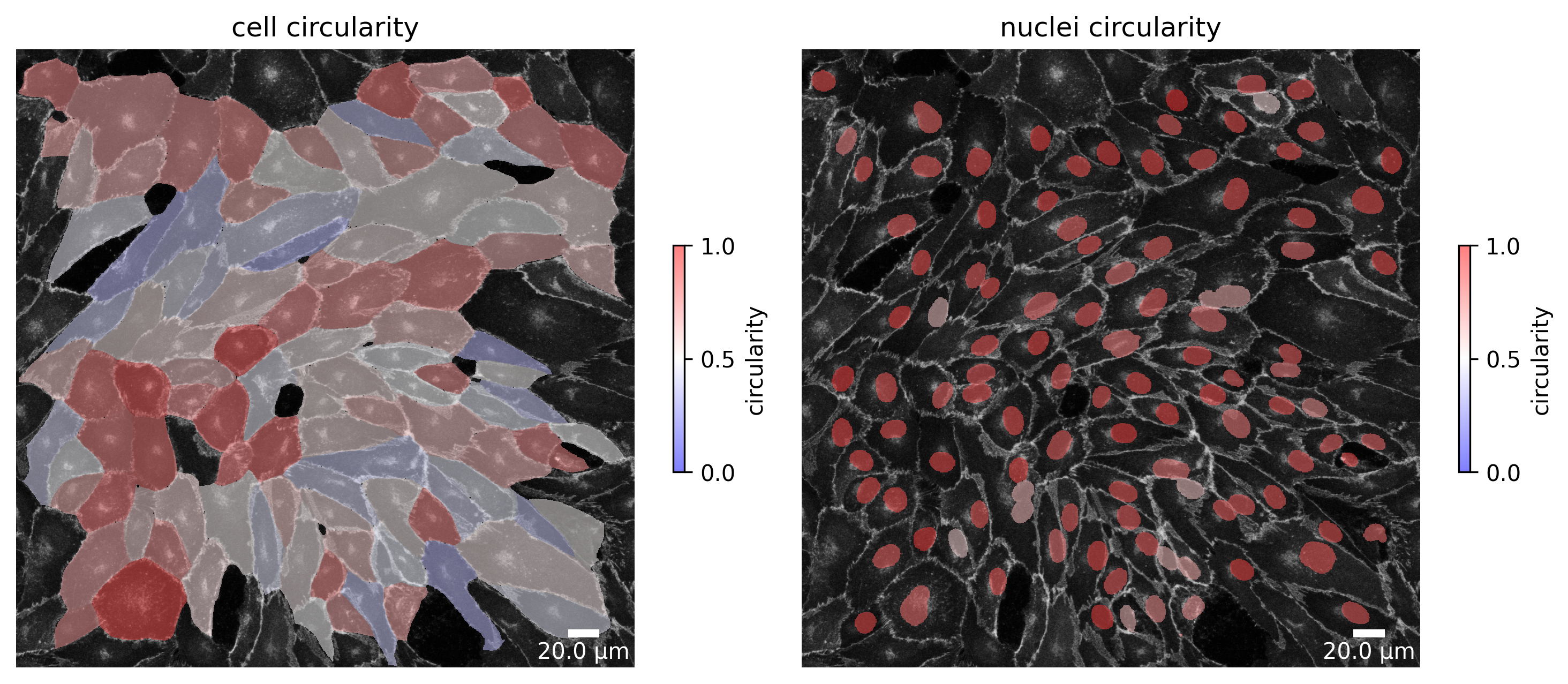

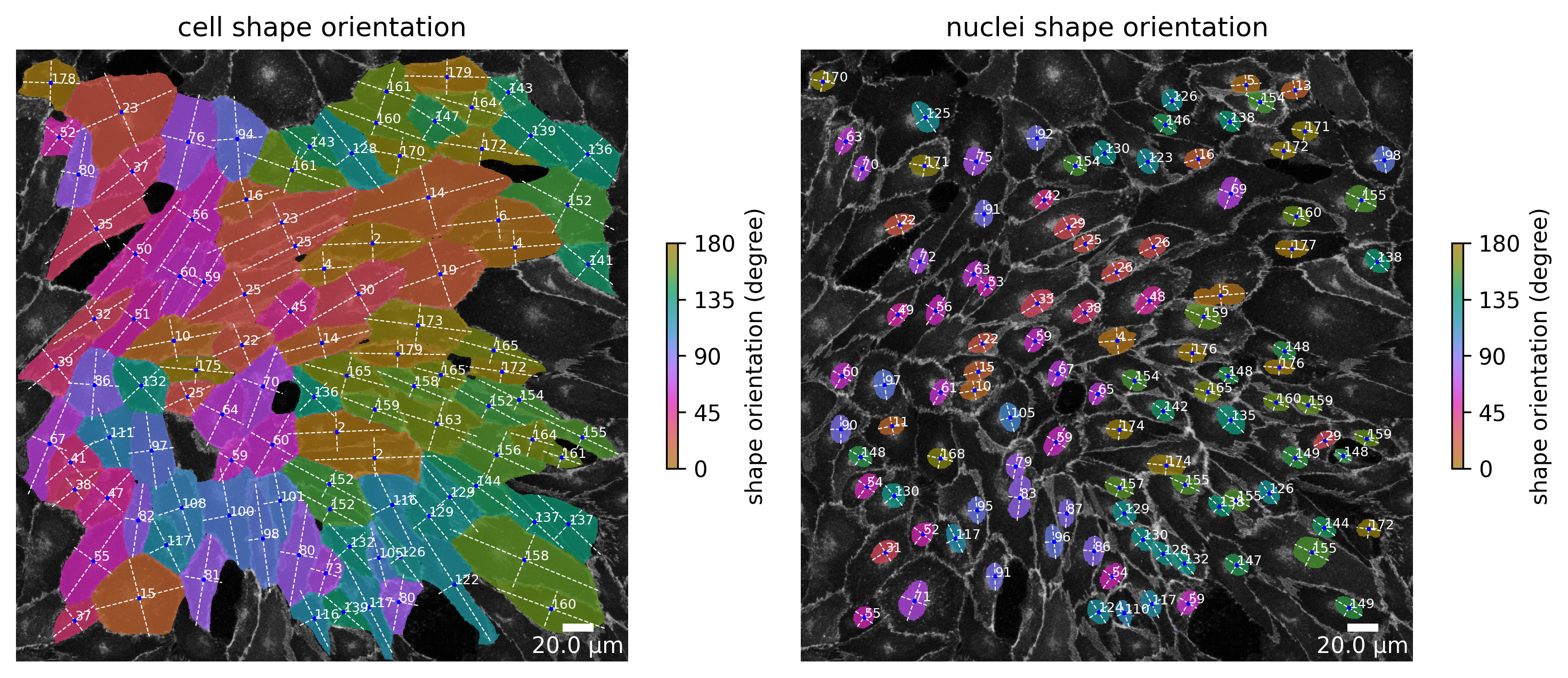

[20]:

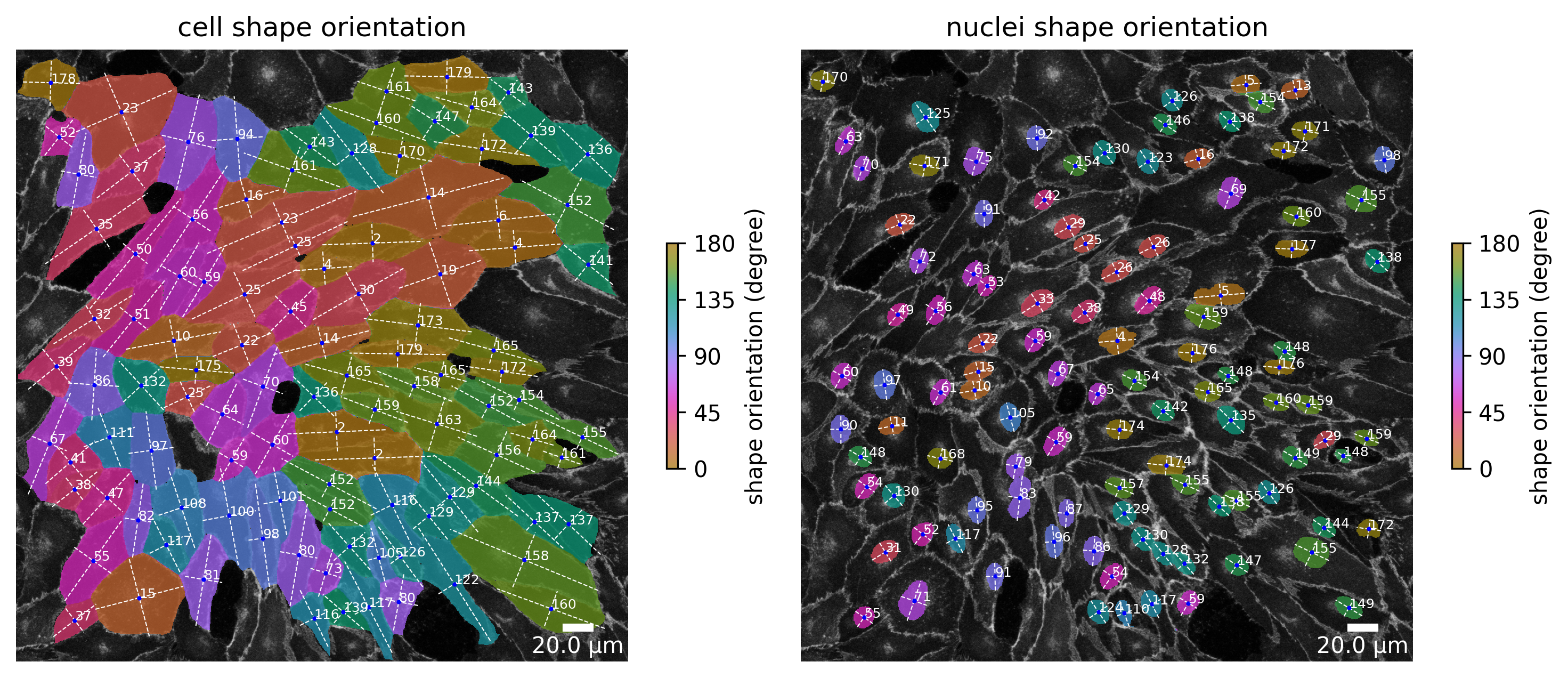

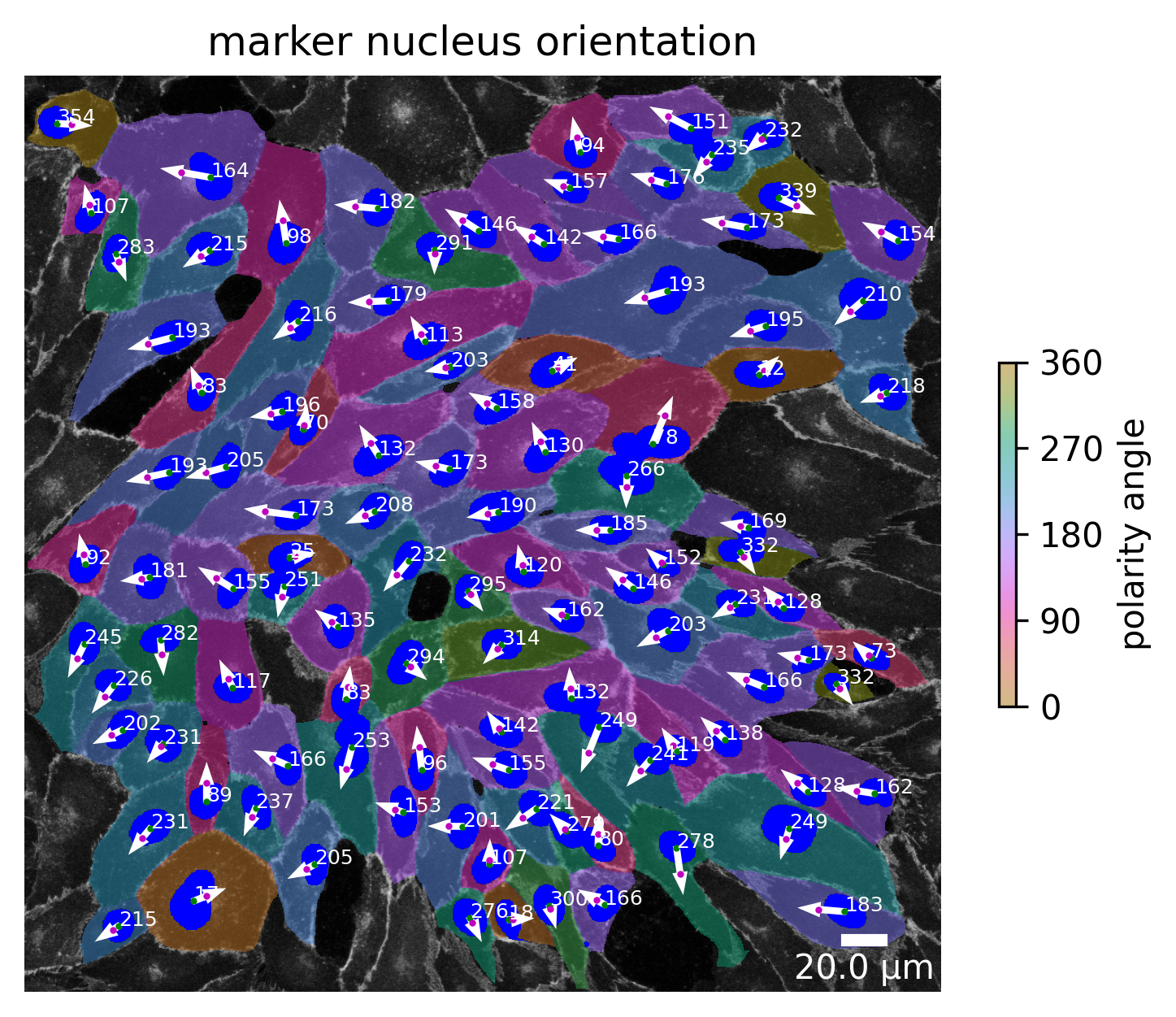

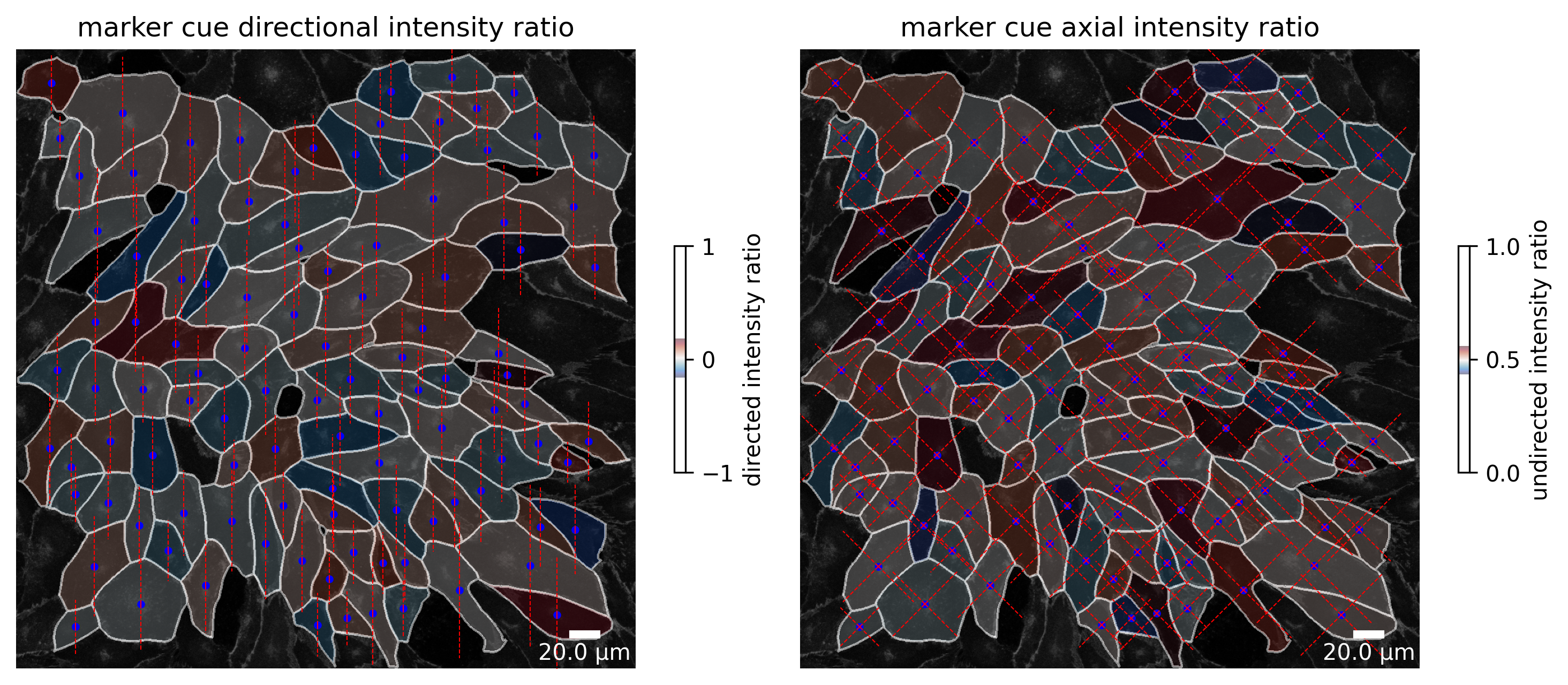

# plot the cell orientation of a specific image in your collection

plotter.plot_shape_orientation(collection, "060721_EGM2_18dyn_02"); # image is automatically saved in output_path

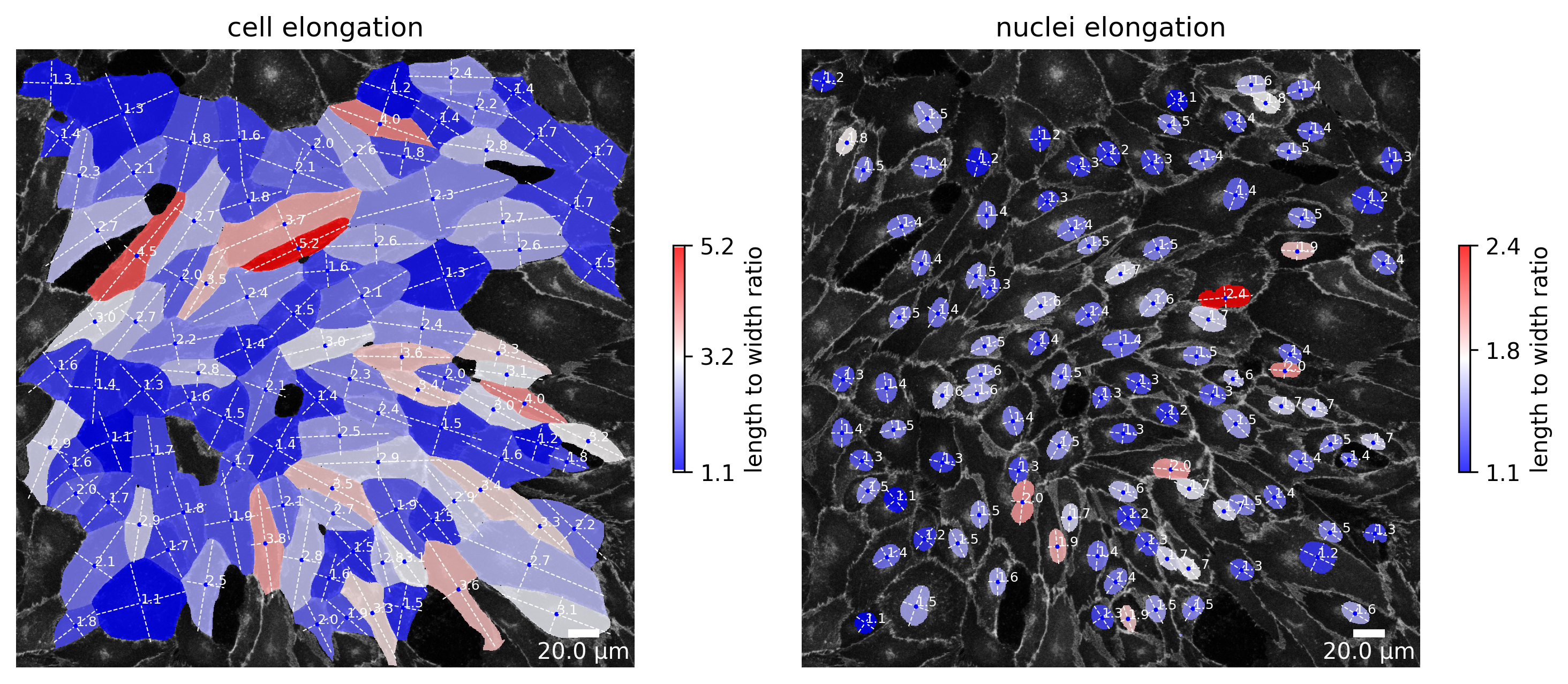

[21]:

plotter.plot_length_width_ratio(collection, "060721_EGM2_18dyn_02");

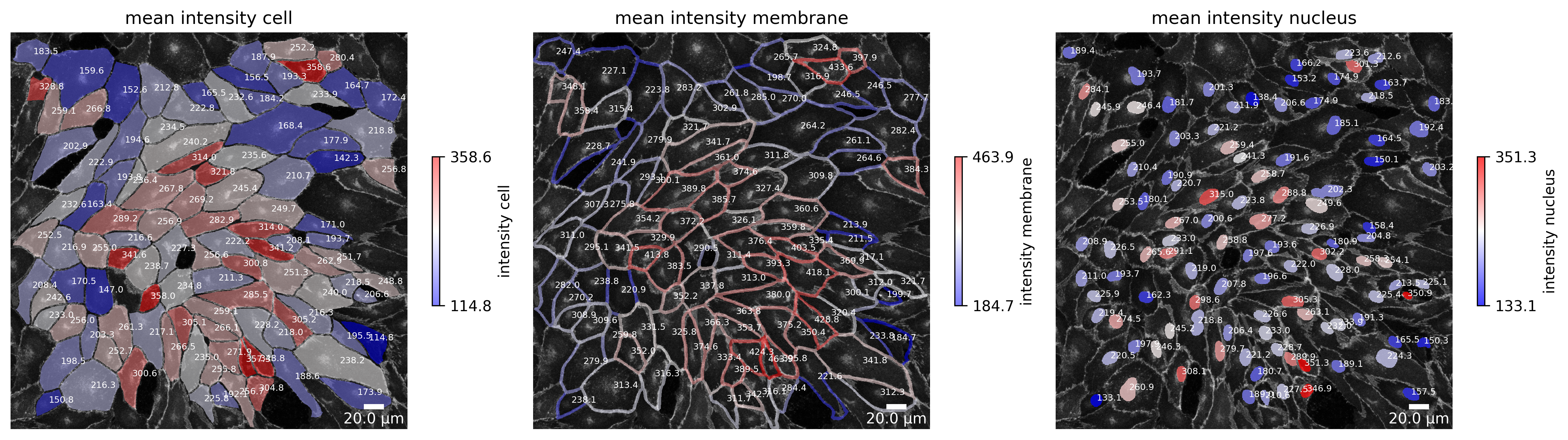

[22]:

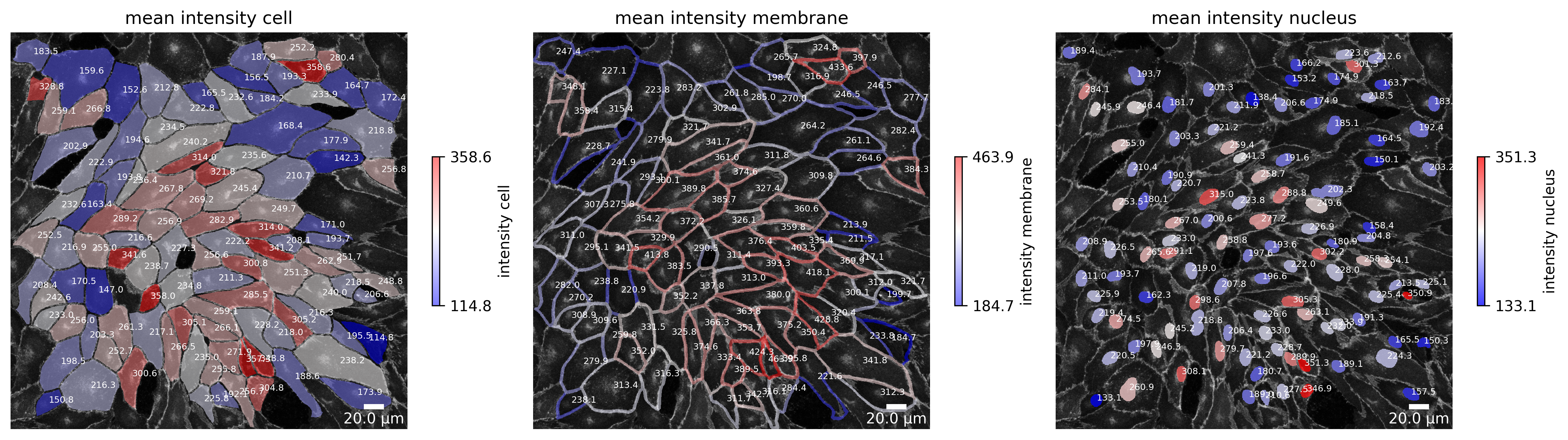

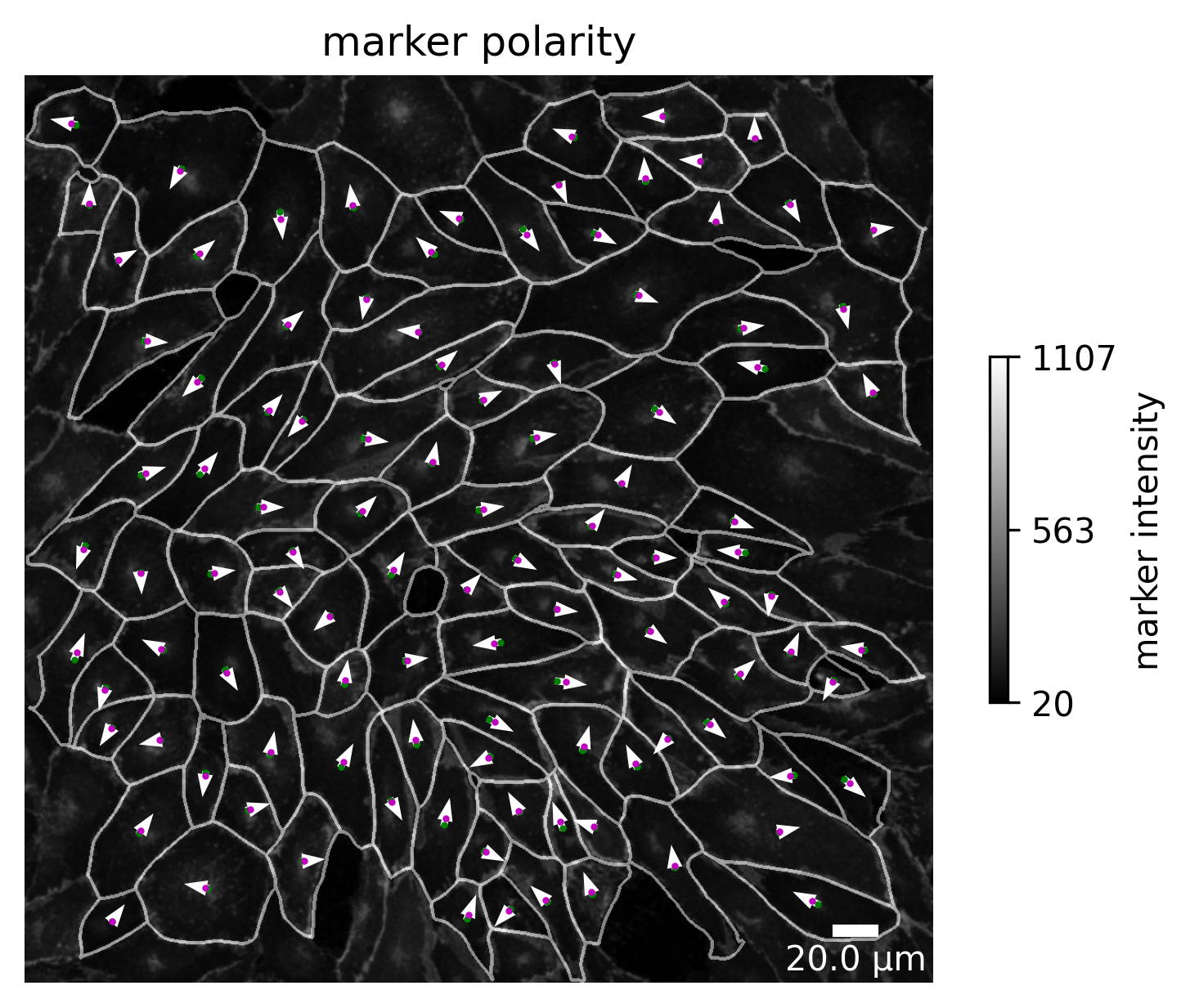

plotter.plot_marker_expression(collection, "060721_EGM2_18dyn_02");

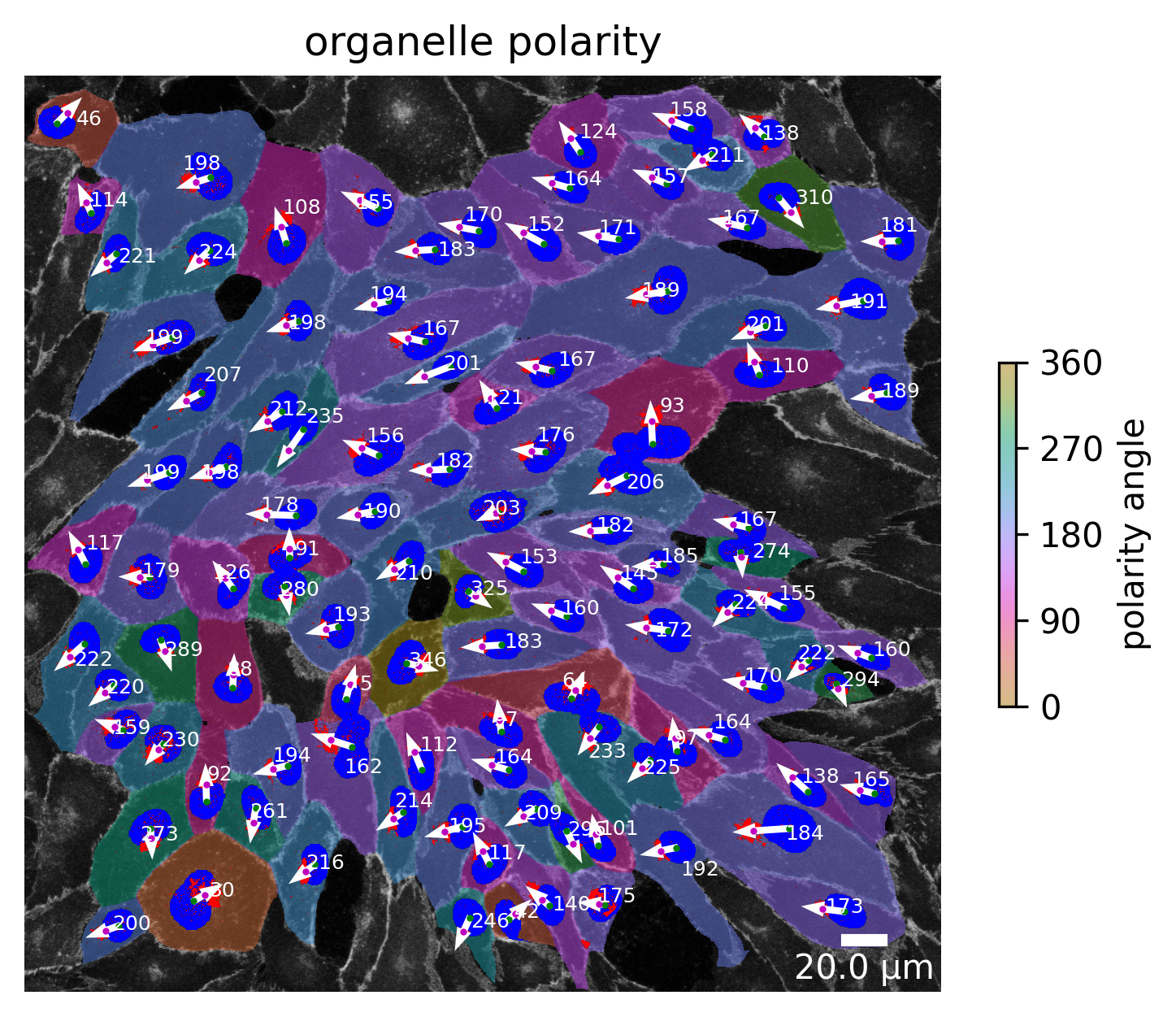

[23]:

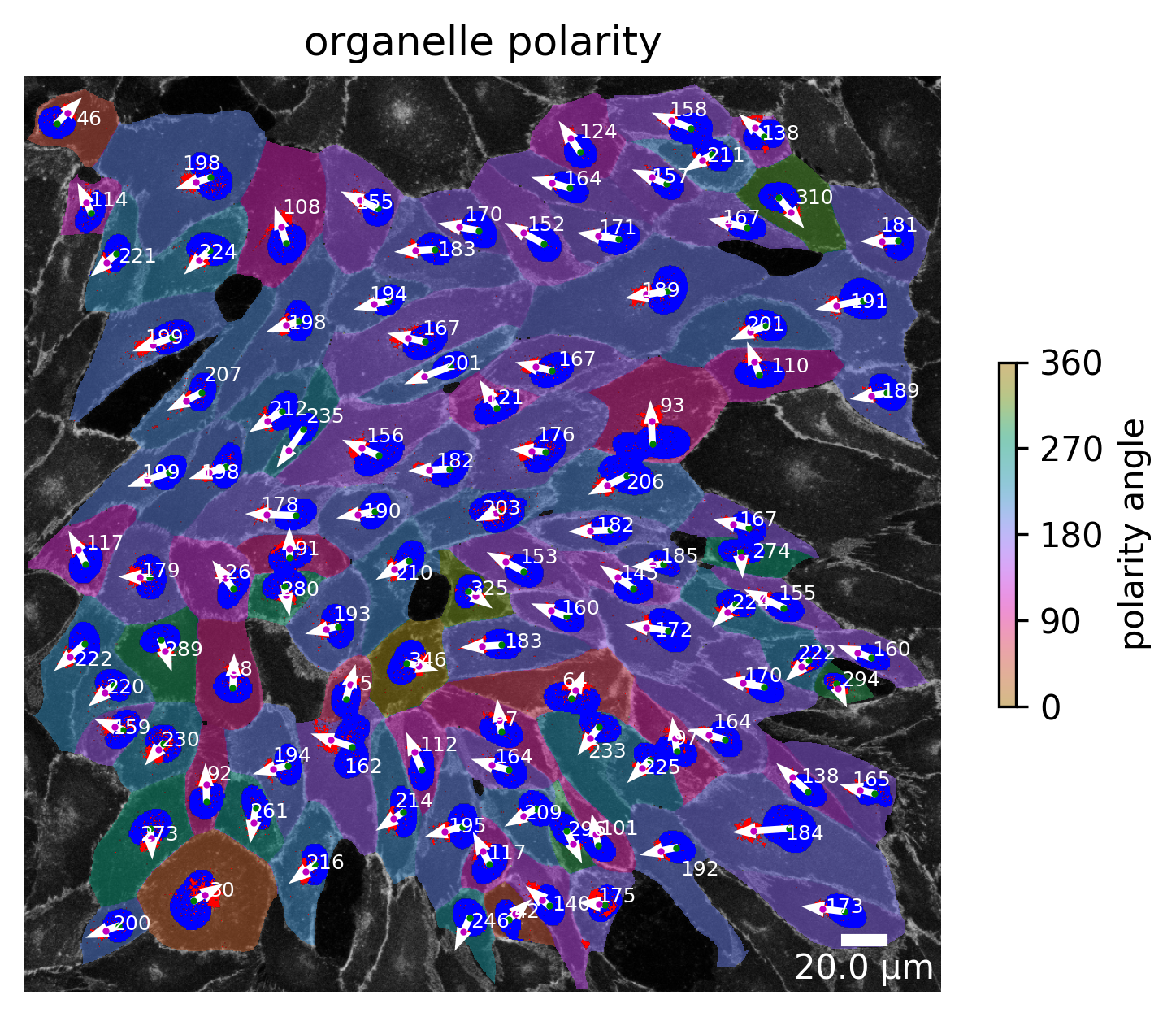

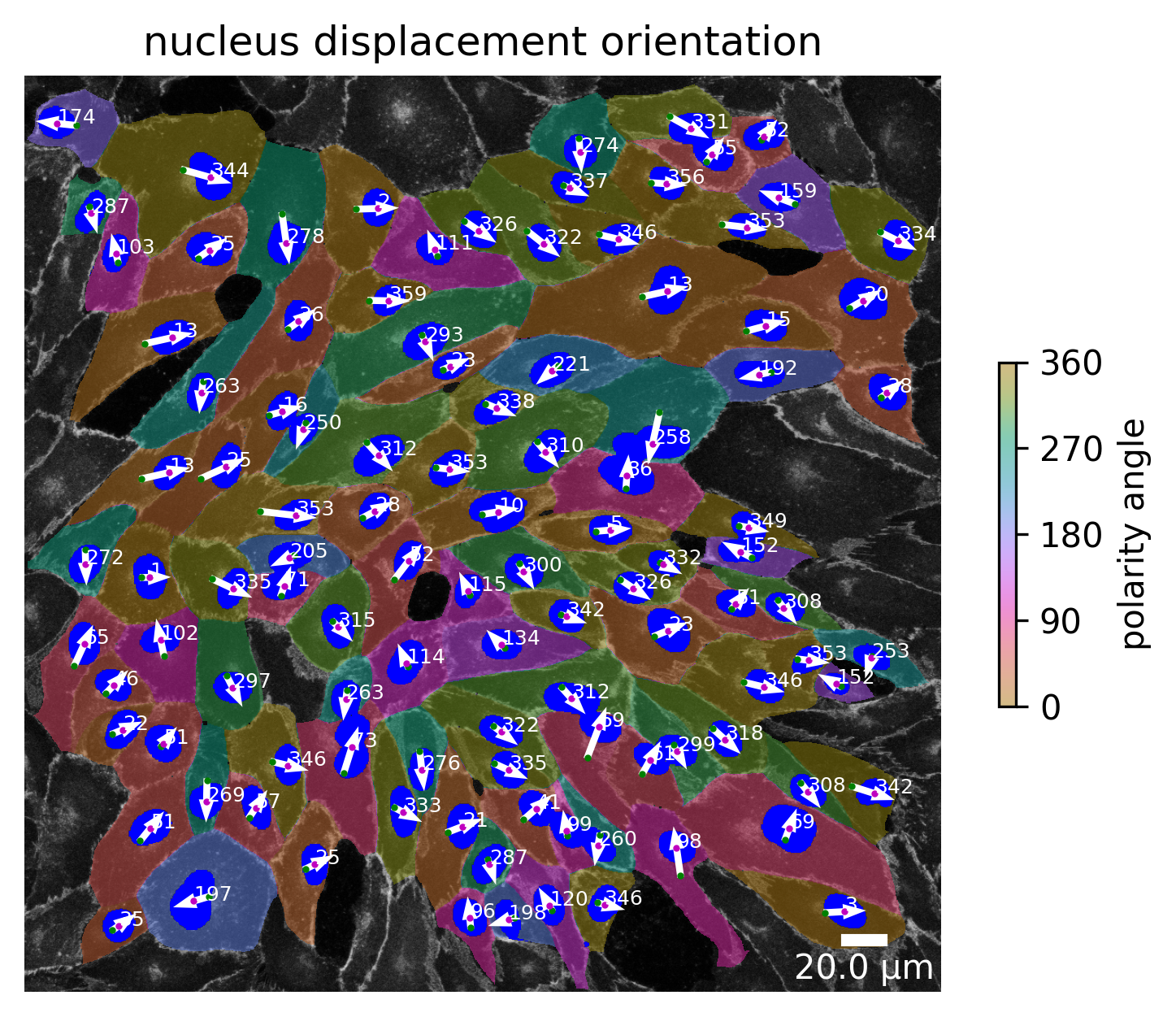

plotter.plot_organelle_polarity(collection, "060721_EGM2_18dyn_02");

Or simply plot the whole collection!

Note

This produces several plots for each image in the collection! Runtime can be high!

[24]:

plotter.plot_collection(collection)
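With several plots per image, it helps to list what was written. The sketch below collects all graphics files below a folder, assuming the plots are saved under `output_path` with the extensions given in `graphics_output_format` (`png` by default, as printed above). `list_graphics` is a hypothetical helper, and the demonstration uses a temporary folder with placeholder files.

```python
from pathlib import Path
import tempfile


def list_graphics(output_path, formats=("png",)) -> list:
    """Sorted relative paths of all files below output_path with the given extensions."""
    folder = Path(output_path)
    return sorted(
        path.relative_to(folder).as_posix()
        for fmt in formats
        for path in folder.rglob(f"*.{fmt}")
    )


# Demonstration with placeholder files in a temporary folder.
demo_folder = Path(tempfile.mkdtemp())
(demo_folder / "sub").mkdir()
(demo_folder / "a.png").touch()
(demo_folder / "sub" / "b.png").touch()
(demo_folder / "notes.txt").touch()
found = list_graphics(demo_folder)
```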
